Google rolled out the BERT algorithm update to its Search system last week, as it announced on its blog on October 25, 2019. The algorithm, designed to better understand what’s important in natural language queries, marks a fundamental change, and Google said it would impact 1 in 10 queries. Yet many SEOs and webmasters did not notice massive changes in Google rankings while it rolled out in Search over the past week.

The question is: how can that be?

The short answer is that the BERT update was really about understanding “longer, more conversational queries”.

Google is practically synonymous with a digital encyclopedia, with the difference that it keeps updating and adding new inputs with every query it encounters.

Surprisingly, even after so many algorithm updates, Google still sometimes struggles to anticipate queries or decode the actual context of a search. According to Google, around 15% of the queries it sees every day are ones it has never seen before.

So where does the difference lie?

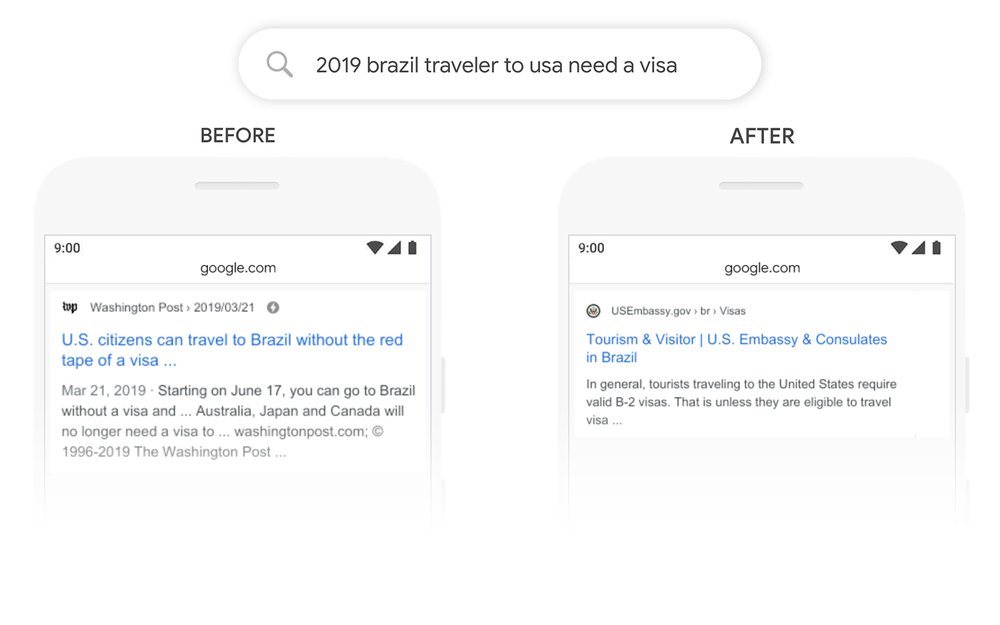

Source – Google Blog

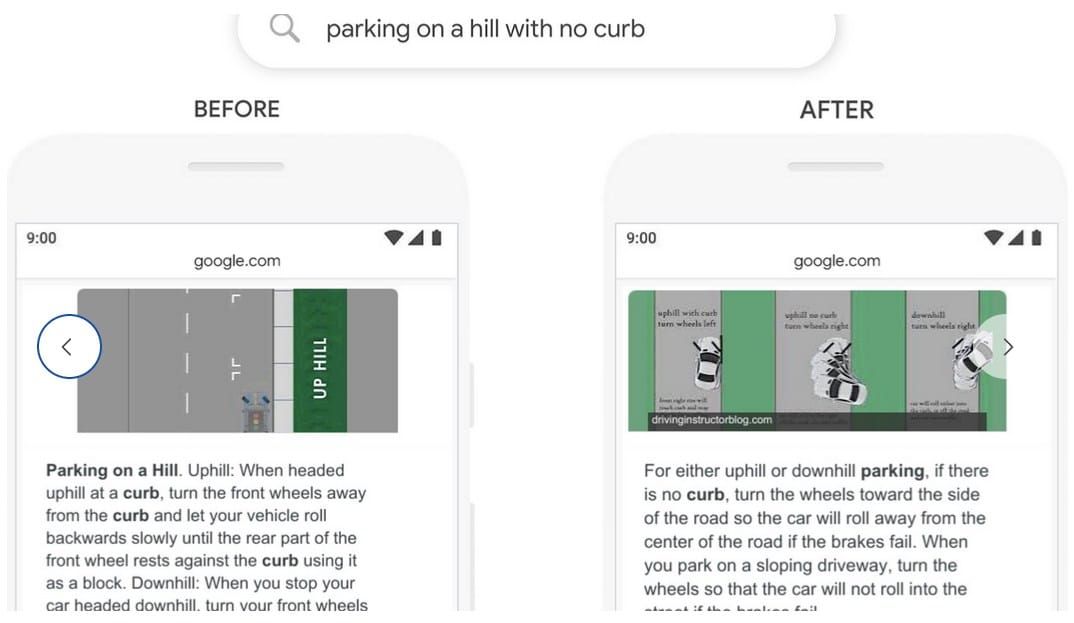

Source – Google Blog

Formulation, spelling, or simply the fact that we are searching to learn and don’t yet know the exact terminology: any of these can create that gap.

For a machine, search hinges on how we phrase our query. When words are omitted, the query is badly formulated, or the machine misreads it, bridging that gap becomes very difficult.

Google is working on this, especially for complex or conversational queries, which account for most of the problems, and is trying to narrow the gap between machine language and human language.

Its breakthrough came with BERT.

2018 was the big year: Google open-sourced BERT, a neural network-based technique for Natural Language Processing (NLP) pre-training. The name stands for Bidirectional Encoder Representations from Transformers.

Technicalities can sound scary and tempt us to skip them without knowing what is in store.

So, what is BERT?

In simple terms, BERT builds on the Transformer architecture, in which each word is processed in relation to all the other words in a sentence rather than one by one in sequence. BERT models can therefore consider the full context of a word by looking at the words that come before and after it, which is particularly useful for understanding the intent behind search queries.
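The core idea, every word attending to every other word, can be sketched as a toy self-attention step. This is a simplified illustration under stated assumptions, not BERT’s actual implementation: the 2-dimensional word vectors are made up, and a real Transformer’s learned query/key/value projections and multiple attention heads are left out.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Toy scaled dot-product self-attention (no learned projections).

    Every output vector is a weighted average of ALL input vectors, so each
    word draws on the words both before and after it.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Similarity of this word to every word in the sentence, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # Weighted sum over all word vectors, left and right context alike
        outputs.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return outputs, all_weights

# Hypothetical 2-dimensional "embeddings" for a three-word query
sentence = ["bank", "river", "fishing"]
vecs = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
out, weights = self_attention(vecs)
for word, w in zip(sentence, weights):
    print(word, [round(x, 2) for x in w])
```

Each row of weights shows how much one word draws on every word in the sentence, including the words to its right, which is what “bidirectional” refers to here.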

Some BERT models are so complex that they push the limits of traditional hardware, so for the first time Google is using its latest Cloud TPUs to serve search results and get you more relevant information quickly.

Decoding queries:

BERT is built on the Transformer’s encoder, the part that reads the text input, and it has to be trained as a language model. That poses a challenge: defining the prediction goal. Many language models simply predict the next word in a sequence, a one-directional approach that limits how much context the model can learn from.
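A tiny illustration of that limitation, using a made-up sentence: a left-to-right model predicting a word can only condition on what comes before it, while a masked, bidirectional setup like BERT’s also sees what comes after. The helper names below are hypothetical.

```python
def left_to_right_context(tokens, i):
    """Context available to a classic next-word model when predicting tokens[i]."""
    return tokens[:i]

def bidirectional_context(tokens, i):
    """Context available when tokens[i] is masked and both sides stay visible."""
    return tokens[:i] + ["[MASK]"] + tokens[i + 1:]

sentence = "he went to the bank to deposit money".split()
target = sentence.index("bank")
print(left_to_right_context(sentence, target))  # ['he', 'went', 'to', 'the']
print(bidirectional_context(sentence, target))
```

Only the bidirectional context contains “deposit money”, the words that tell you which kind of “bank” is meant.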

BERT uses two training strategies to deal with this:

Masked LM (MLM): Before word sequences are fed into BERT, about 15% of the words in each sequence are selected and (mostly) replaced with a [MASK] token. The model then tries to predict the original value of the masked words from the context provided by the other, non-masked words. Predicting the output words involves:

- Adding a classification layer on top of the encoder output.

- Multiplying the output vectors by the embedding matrix, transforming them into the vocabulary dimension.

- Calculating the probability of each word in the vocabulary with softmax.
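The three steps above can be sketched for a single masked position as follows. This is a toy, pure-Python sketch under stated assumptions: the vocabulary, embedding values and dense-layer weights are made up, `tanh` stands in for the activation BERT actually uses (GELU), and layer normalization is omitted.

```python
import math

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def mlm_head(encoder_output, dense_w, dense_b, embedding_matrix):
    """Toy masked-LM output head for one masked position.

    1. A small dense (classification) layer on top of the encoder output;
       tanh is a stand-in for BERT's GELU activation.
    2. Multiply by the word-embedding matrix (one row per vocabulary word),
       giving one score per word in the vocabulary.
    3. Softmax turns the scores into probabilities over the vocabulary.
    """
    hidden = [math.tanh(h + b) for h, b in zip(matvec(dense_w, encoder_output), dense_b)]
    logits = matvec(embedding_matrix, hidden)
    return softmax(logits)

vocab = ["river", "money", "loan", "bank"]
embeddings = [[0.9, 0.1], [0.1, 0.9], [0.2, 0.8], [0.5, 0.5]]  # hypothetical values
probs = mlm_head([1.0, -0.5], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], embeddings)
print(max(zip(probs, vocab)))  # most probable word and its probability
```

Reusing the input embedding matrix in step 2 is what maps the hidden vector back into the vocabulary dimension without a separate output table.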

BERT’s loss function considers only the predictions for the masked words and ignores the predictions for the non-masked ones. Masking is what lets the model draw on context from both directions, which increases its context awareness.
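The masking step and the masked-only loss can be sketched in plain Python. This is a simplified illustration under stated assumptions: whitespace splitting stands in for BERT’s WordPiece tokenizer, every selected word becomes [MASK] (the paper’s refinement of sometimes keeping the original word or swapping in a random one is omitted), and `predicted_probs` is a hypothetical stand-in for a model’s output.

```python
import math
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Replace roughly mask_prob of the tokens with [MASK].

    Simplified: always uses [MASK] for selected tokens.
    Returns the masked sequence and the positions that were masked.
    """
    rng = rng or random.Random()
    masked, positions = list(tokens), []
    for i in range(len(tokens)):
        if rng.random() < mask_prob:
            masked[i] = MASK
            positions.append(i)
    if not positions:  # guarantee at least one prediction target
        i = rng.randrange(len(tokens))
        masked[i] = MASK
        positions.append(i)
    return masked, positions

def masked_lm_loss(predicted_probs, tokens, positions):
    """Cross-entropy averaged over MASKED positions only.

    predicted_probs[i] maps each vocabulary word to its probability at
    position i; predictions at non-masked positions are never looked at.
    """
    losses = [-math.log(predicted_probs[i][tokens[i]]) for i in positions]
    return sum(losses) / len(losses)

tokens = "the man went to the store to buy milk".split()
masked, positions = mask_tokens(tokens, rng=random.Random(0))
print(masked, positions)

vocab = sorted(set(tokens))
# A model that knows nothing: uniform probabilities at every position
uniform = [{w: 1 / len(vocab) for w in vocab} for _ in tokens]
print(masked_lm_loss(uniform, tokens, positions))  # about log(len(vocab))
```

Because only masked positions contribute, a model that guesses uniformly gets a loss of log(vocabulary size) no matter which words were masked.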

Next Sentence Prediction (NSP): In this task, BERT receives pairs of sentences as input and learns to predict whether the second sentence in the pair is the sentence that follows the first one in the original document.

During training, in half of the pairs the second sentence really is the next sentence in the original document; in the other half it is a random sentence from the corpus, which is assumed to have a completely different context from the first.
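The 50/50 construction of training pairs can be sketched as follows, assuming a document already split into sentences and a separate pool of unrelated sentences to draw the random ones from. The function name `make_nsp_pairs` and the example sentences are hypothetical.

```python
import random

def make_nsp_pairs(document, corpus, rng=None):
    """Build (sentence_a, sentence_b, is_next) training pairs.

    For each adjacent pair in `document`, with probability 0.5 keep the real
    next sentence (label IsNext = True); otherwise substitute a random
    sentence from `corpus` (label False), assumed to be unrelated.
    """
    rng = rng or random.Random()
    pairs = []
    for a, b in zip(document, document[1:]):
        if rng.random() < 0.5:
            pairs.append((a, b, True))
        else:
            pairs.append((a, rng.choice(corpus), False))
    return pairs

doc = ["The man went to the store.", "He bought a gallon of milk.", "Then he walked home."]
other = ["Penguins are flightless birds.", "The stock market fell today."]
pairs = make_nsp_pairs(doc, other, rng=random.Random(1))
for pair in pairs:
    print(pair)
```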

To help the model distinguish between the two sentences during training, the input is processed as follows before it enters the model:

- A [CLS] token is inserted at the beginning of the first sentence, and a [SEP] token is inserted at the end of each sentence.

- A sentence embedding indicating sentence A or sentence B is added to each token. Conceptually, it is similar to a token embedding with a vocabulary of just two entries.

- A positional embedding is added to each token to indicate its position in the sequence. The Transformer paper discusses the concept and implementation of positional embeddings.
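The input format can be sketched by building, for a sentence pair, the token sequence together with a segment id and a position index for each token. The example pair follows the BERT paper’s illustration; the function name is hypothetical, WordPiece tokenization is assumed to have already happened, and the step where each id is looked up in its own embedding table and the three embeddings are summed is only described in a comment.

```python
def build_bert_input(tokens_a, tokens_b):
    """Assemble BERT-style input ids for a sentence pair.

    Returns the token sequence plus, for each token, its segment id
    (0 = sentence A, 1 = sentence B) and its position index. In the real
    model each id is looked up in its own embedding table and the token,
    segment and position embeddings are summed; here we only build the ids.
    """
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # [CLS], sentence A and its [SEP] belong to segment 0; the rest to segment 1
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    position_ids = list(range(len(tokens)))
    return tokens, segment_ids, position_ids

tokens, segments, positions = build_bert_input(
    ["my", "dog", "is", "cute"], ["he", "likes", "play", "##ing"]
)
for t, s, p in zip(tokens, segments, positions):
    print(f"{p:2d} {s} {t}")
```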

The following steps are performed to predict whether the second sentence is connected to the first one:

- The entire input sequence goes through the Transformer model.

- The output of the [CLS] token is transformed into a 2×1 vector using a simple classification layer (learned weight and bias matrices).

- Finally, the probability of IsNextSequence is calculated with softmax.
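The classification step on the [CLS] output can be sketched as a single linear layer followed by softmax. The [CLS] vector, weights and bias below are made-up numbers standing in for a trained model’s values, and treating index 0 as IsNext is an arbitrary convention of this sketch.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def nsp_probability(cls_vector, weights, bias):
    """Toy NSP head: map the final [CLS] vector to 2 logits, then softmax.

    weights is a 2 x hidden_size matrix and bias a length-2 vector.
    Index 0 = IsNext, index 1 = NotNext (convention of this sketch).
    """
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)[0]  # probability of IsNextSequence

cls_out = [0.3, -1.2, 0.8]  # hypothetical final-layer [CLS] vector
W = [[0.5, -0.4, 0.9], [-0.5, 0.4, -0.9]]
b = [0.1, -0.1]
p_is_next = nsp_probability(cls_out, W, b)
print(f"P(IsNext) = {p_is_next:.3f}")
```

With all-zero weights the head has no preference and returns 0.5, which is a handy sanity check.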

When training BERT, Masked LM and Next Sentence Prediction are trained together, with the goal of minimizing the combined loss function of the two strategies.
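The BERT paper describes the pre-training loss as the sum of the mean masked LM likelihood and the mean next sentence prediction likelihood. For a single input, and using notation introduced here for illustration, that objective can be written as:

$$
\mathcal{L} = \mathcal{L}_{\text{MLM}} + \mathcal{L}_{\text{NSP}}, \qquad
\mathcal{L}_{\text{MLM}} = -\frac{1}{|M|}\sum_{i \in M} \log p\left(x_i \mid \tilde{x}\right), \qquad
\mathcal{L}_{\text{NSP}} = -\log p\left(y \mid \tilde{x}\right)
$$

where $M$ is the set of masked positions, $x_i$ the original word at position $i$, $\tilde{x}$ the masked input pair, and $y$ the IsNext/NotNext label.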

So that’s how it works, but what do you gain from it?

By applying BERT models to both ranking and featured snippets in Search, Google says it can do a much better job of helping you find useful information.

BERT will help Search better understand one in 10 searches in English in the U.S., and Google plans to bring it to more languages and locales over time.

Current trends and future scope of BERT:

Because models can learn from improvements in English and apply them to other languages, Google is already using a BERT model to improve featured snippets in the two dozen countries where that feature is available.

- Research continues on both software upgrades and the hardware that supports the technology.

- Significant improvements in languages such as Korean, Hindi, and Portuguese are encouraging experts to try BERT in new areas.

- With the BERT model, Search can better understand the actual question or information being sought and serve better results.

With sufficient training data, more training steps lead to higher accuracy. For example, on the MNLI task, BERT-Base accuracy improves by about 1.0 percent when trained for 1M steps (batch size of 128,000 words) compared with 500K steps at the same batch size.
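The step counts are easier to compare as total words processed. Treating the 128,000-word batch size literally (many of those words are repeats, since pre-training makes multiple passes over the corpus), a quick calculation gives:

```python
BATCH_WORDS = 128_000  # batch size in words, as stated above

def words_processed(steps, batch_words=BATCH_WORDS):
    """Total words seen during pre-training = steps x words per batch."""
    return steps * batch_words

short_run = words_processed(500_000)
long_run = words_processed(1_000_000)
print(f"500K steps: {short_run:,} words")  # 64,000,000,000
print(f"1M steps:   {long_run:,} words")   # 128,000,000,000
```

Doubling the steps doubles the words the model sees, and on MNLI that extra exposure is what the roughly 1.0 percent gain reflects.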